First-author papers

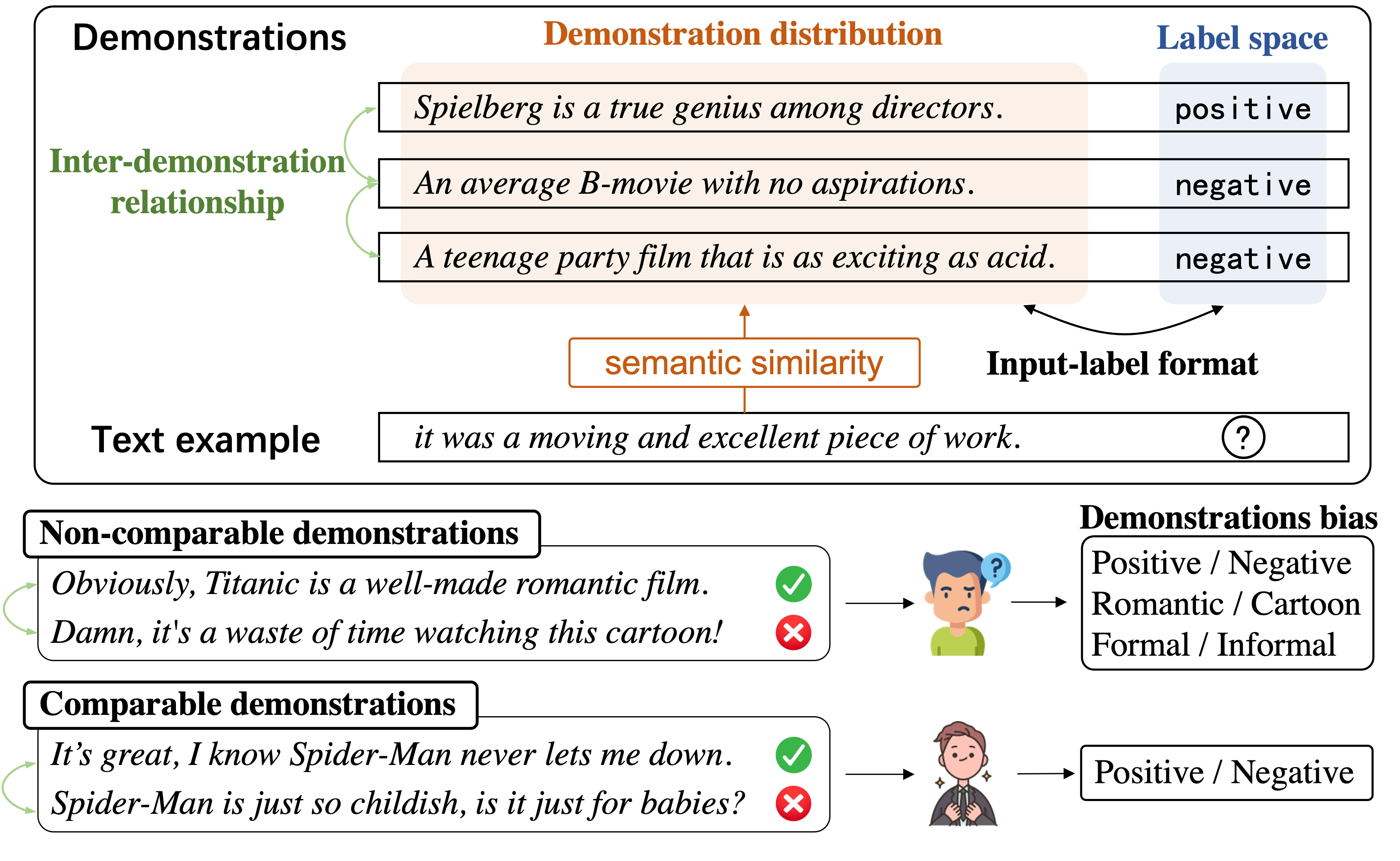

Caoyun Fan, Jidong Tian, Yitian Li, Hao He, Yaohui Jin. "Comparable Demonstrations are Important in In-Context Learning: A Novel Perspective on Demonstration Selection", ICASSP 2024 ORAL (12%) (CCF-B). [PDF]. TL;DR: Because In-Context Learning provides only a limited number of demonstrations, Large Language Models may misunderstand the essence of a task; Comparable Demonstrations can significantly alleviate this problem.

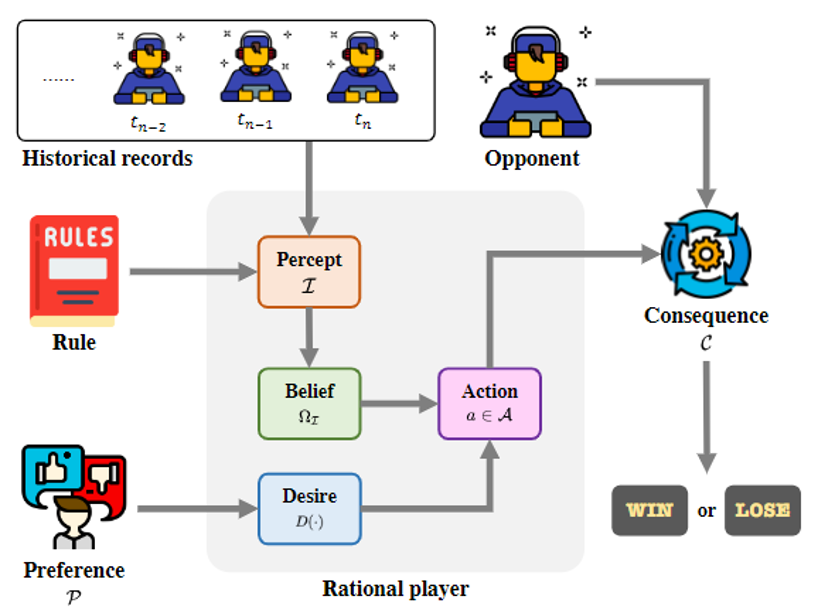

Caoyun Fan, Jindou Chen, Yaohui Jin, Hao He. "Can Large Language Models Serve as Rational Players in Game Theory? A Systematic Analysis", AAAI 2024 ORAL (1.9%) (CCF-A). [PDF]. TL;DR: We select three classic games (the dictator game, Rock-Paper-Scissors, and the ring-network game) to analyze to what extent LLMs can achieve rationality, and find that even the current state-of-the-art LLM (GPT-4) still differs substantially from humans in game-theoretic settings.

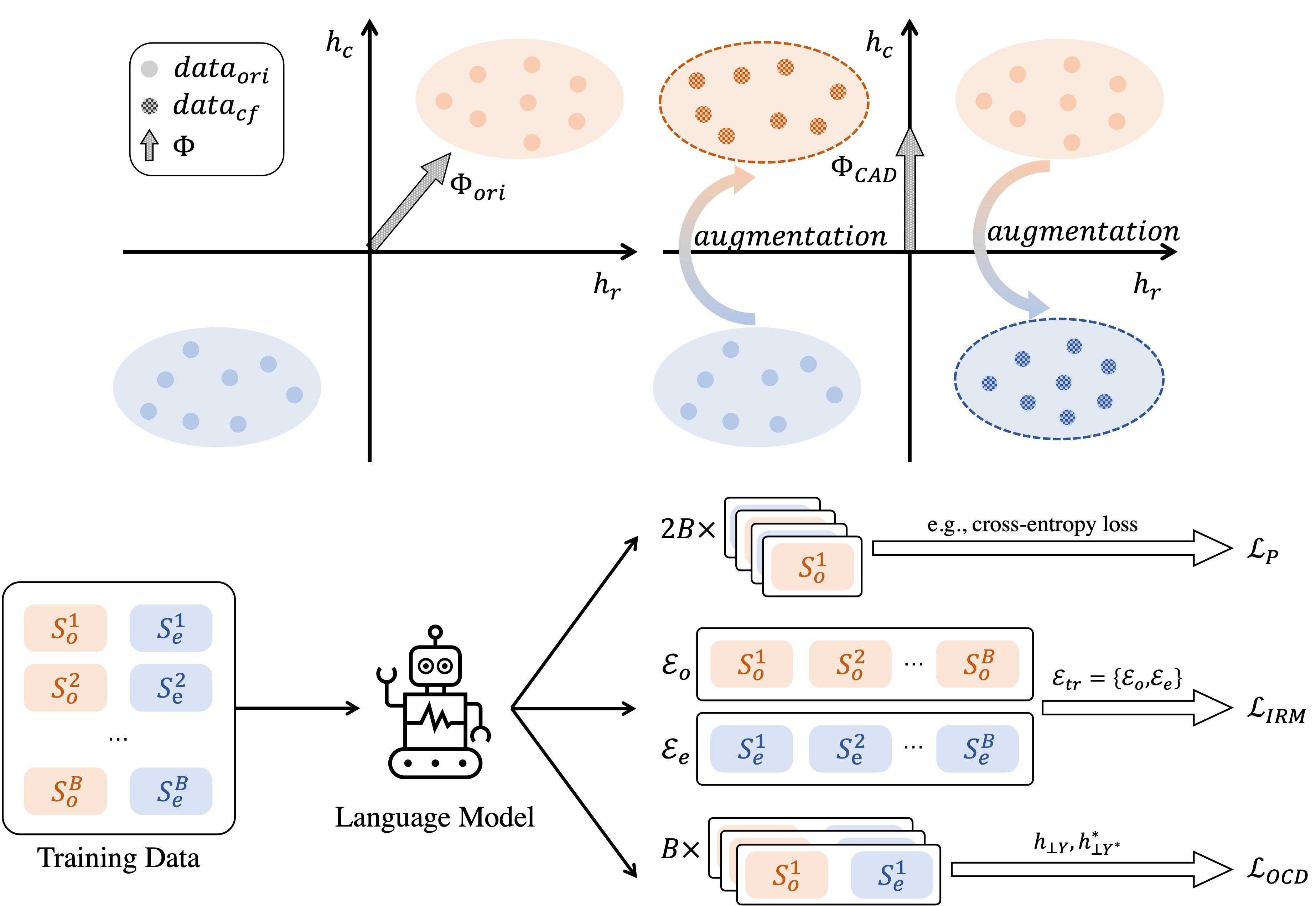

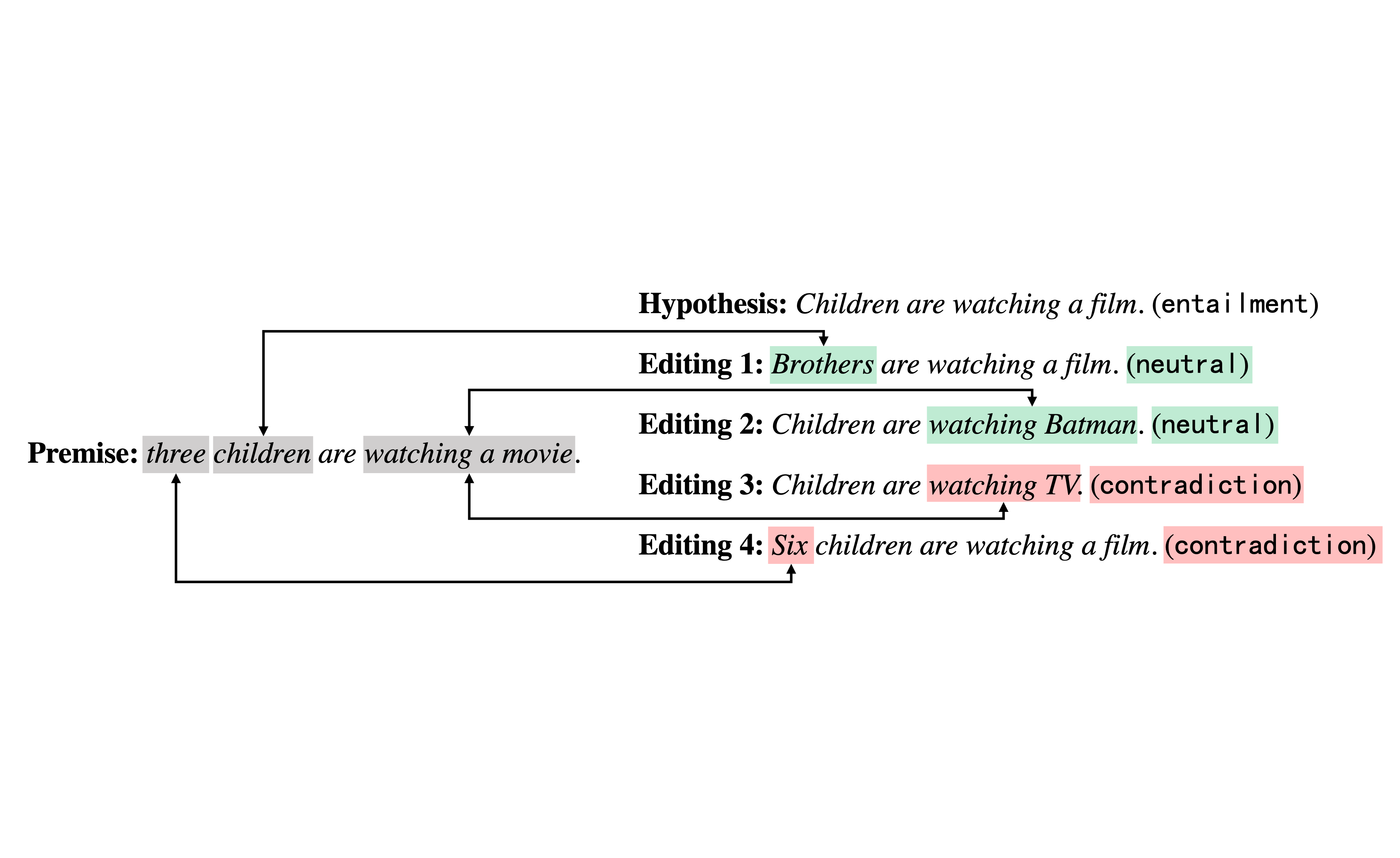

Caoyun Fan, Wenqing Chen, Jidong Tian, Yitian Li, Hao He, Yaohui Jin. "Unlock the Potential of Counterfactually-Augmented Data in Out-Of-Distribution Generalization", Expert Systems With Applications 2024 (SCI Zone 1, IF: 8.5). [PDF]. TL;DR: We theoretically analyze two perspectives on how Counterfactually-Augmented Data (CAD) can improve the Out-Of-Distribution (OOD) generalization of language models, and propose corresponding constraints, which we call the ECF algorithm.

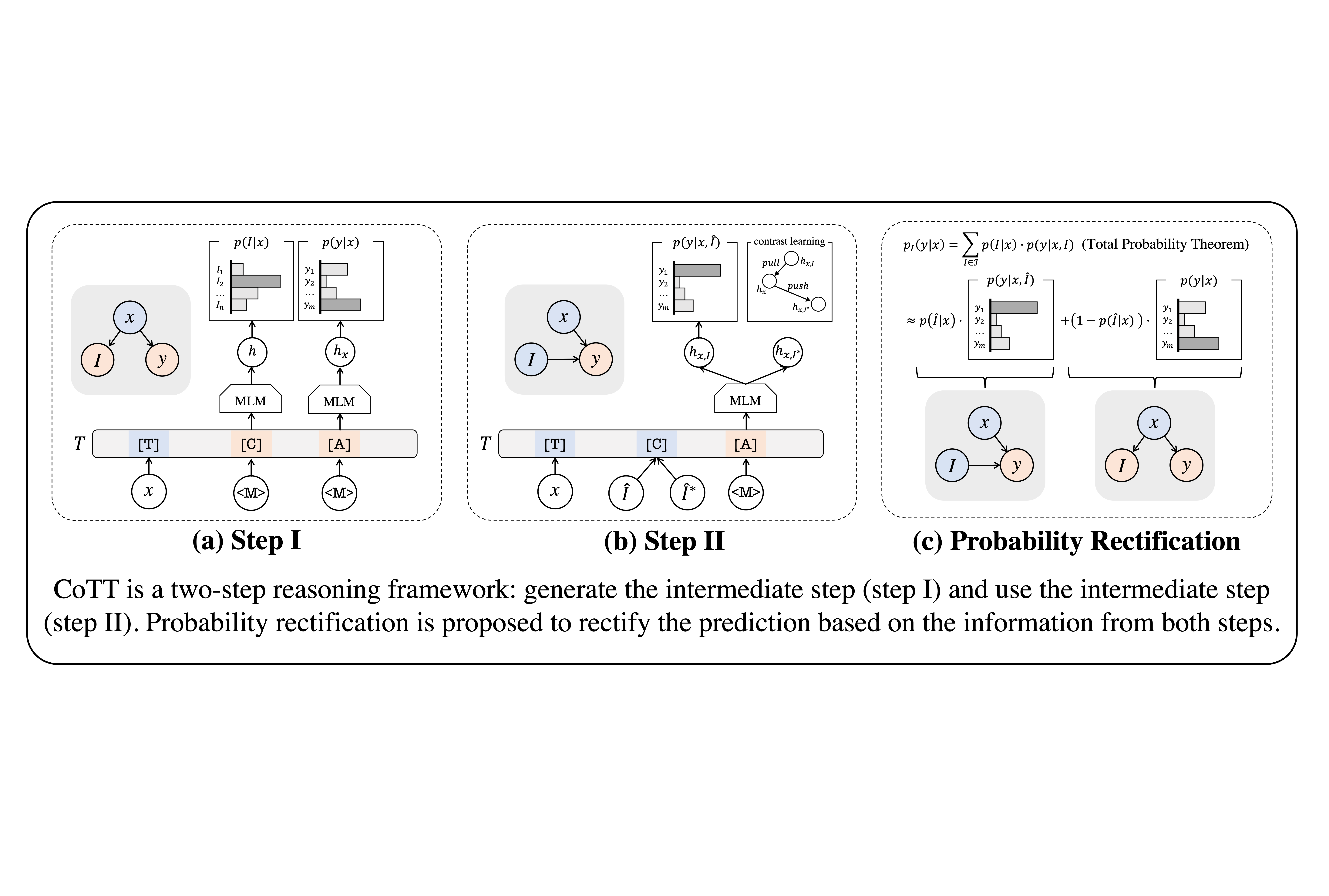

Caoyun Fan, Jidong Tian, Yitian Li, Wenqing Chen, Hao He, Yaohui Jin. "Chain-of-Thought Tuning: Masked Language Models can also Think Step By Step in Natural Language Understanding", EMNLP 2023 (CCF-B). [PDF]. TL;DR: We propose Chain-of-Thought Tuning (CoTT), a two-step reasoning framework based on prompt tuning that enables Masked Language Models (MLMs) to think step by step on Natural Language Understanding (NLU) tasks.

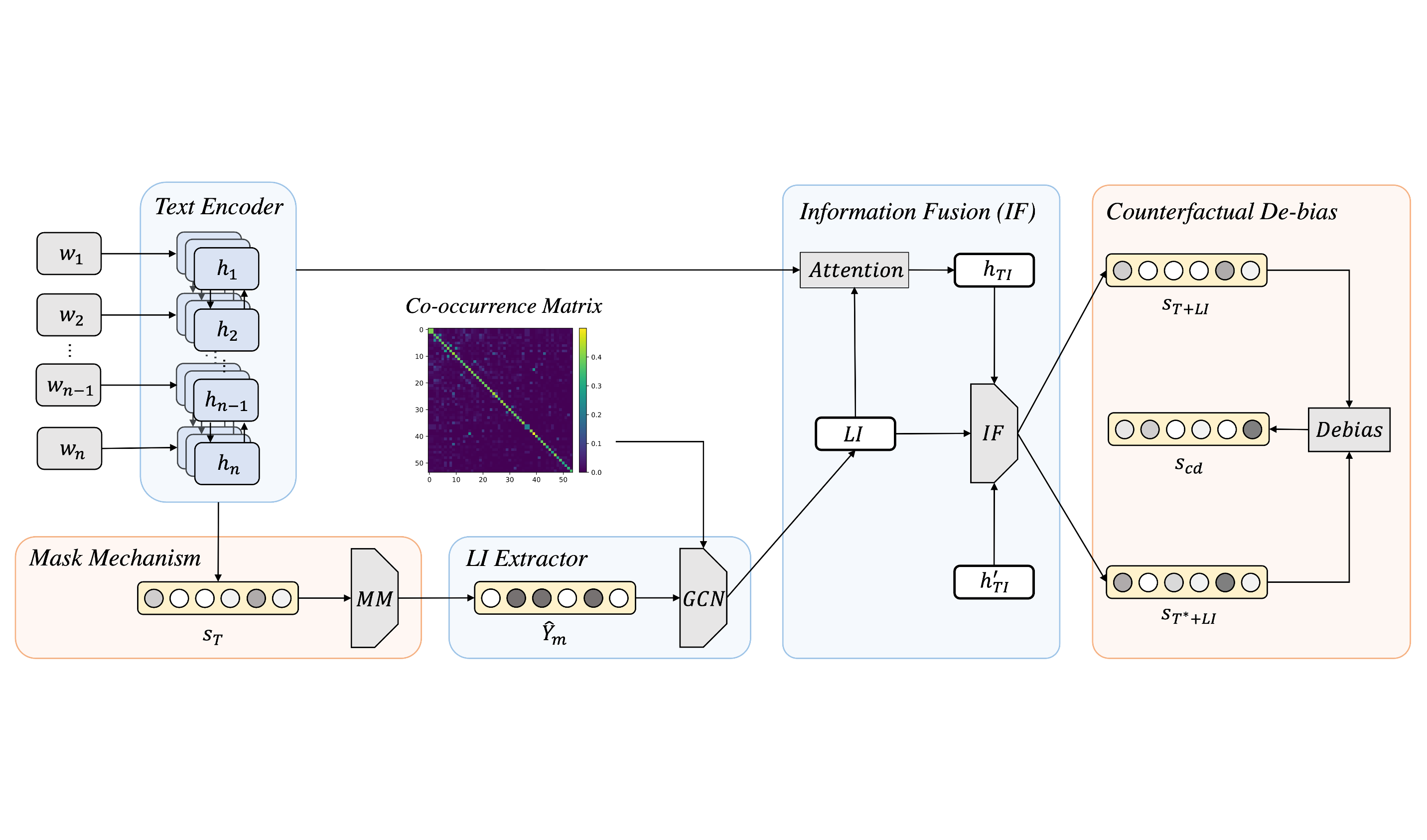

Caoyun Fan, Wenqing Chen, Jidong Tian, Yitian Li, Hao He, Yaohui Jin. "Accurate Use of Label Dependency in Multi-Label Text Classification Through the Lens of Causality", Applied Intelligence 2023 (SCI Zone 2, IF: 5.3). [PDF]. TL;DR: We find that language models exploit correlation shortcuts in label dependencies in multi-label text classification, and propose a CounterFactual Text Classifier (CFTC) that helps language models make causality-based predictions.

Caoyun Fan, Wenqing Chen, Jidong Tian, Yitian Li, Hao He, Yaohui Jin. "Improving the Out-Of-Distribution Generalization Capability of Language Models: Counterfactually-Augmented Data is not Enough", ICASSP 2023 ORAL (11.8%) (CCF-B). [PDF]. TL;DR: We attribute the inefficiency of Counterfactually-Augmented Data (CAD) in Out-Of-Distribution generalization to the “Myopia Phenomenon” and propose two constraints to unlock the potential of CAD.

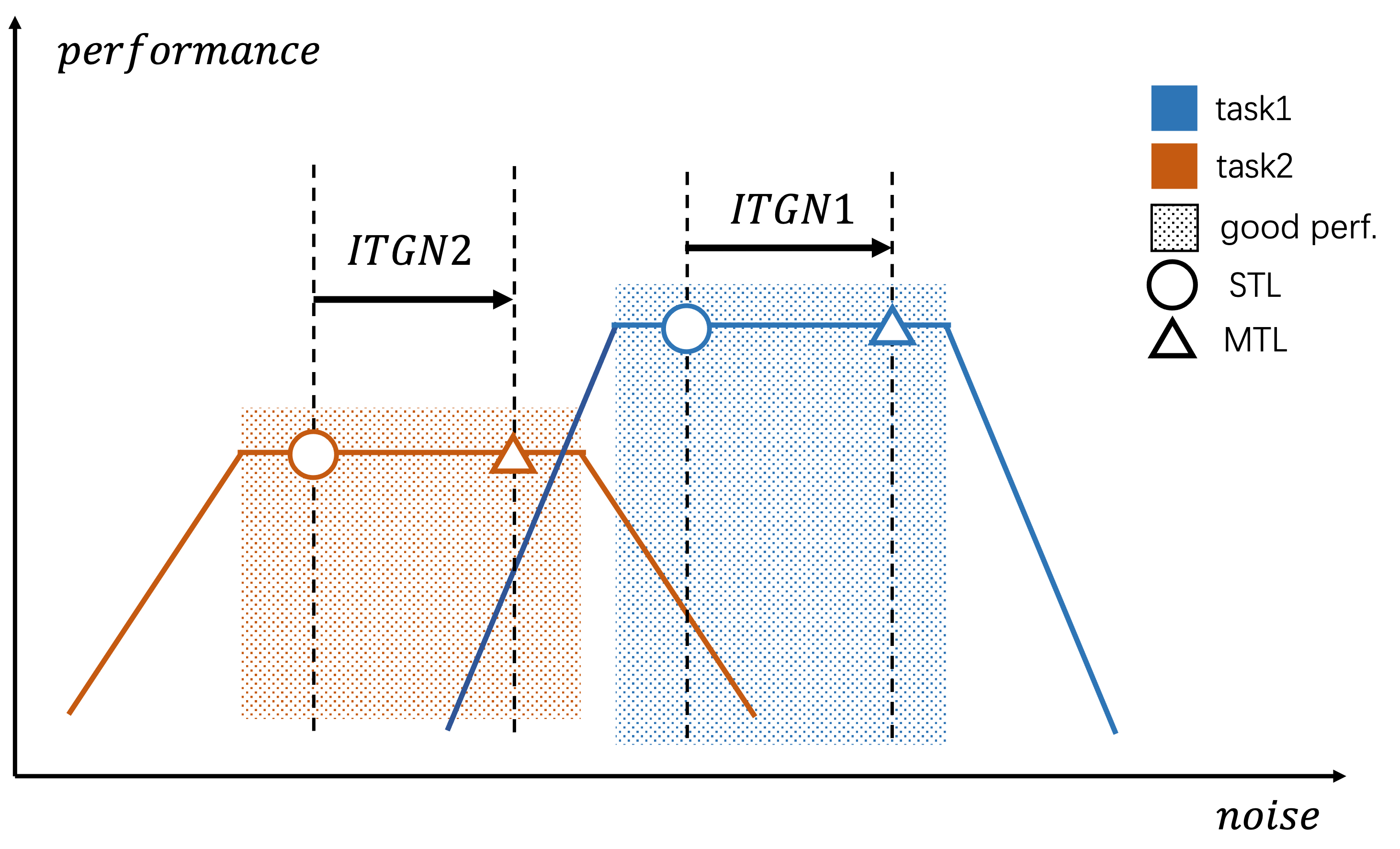

Caoyun Fan, Wenqing Chen, Jidong Tian, Yitian Li, Hao He, Yaohui Jin. "MaxGNR: A Dynamic Weight Strategy via Maximizing Gradient-to-Noise Ratio for Multi-Task Learning", ACCV 2022 (CCF-C). [PDF]. TL;DR: We propose a dynamic weight strategy for multi-task learning that maximizes the Gradient-to-Noise Ratio (GNR).

Co-authored papers

Yitian Li, Jidong Tian, Caoyun Fan, Wenqing Chen, Hao He, Yaohui Jin. "MTR: A Dataset Fusing Inductive, Deductive, and Defeasible Reasoning", Findings of ACL 2023 (CCF-A). [PDF]

Jidong Tian, Wenqing Chen, Yitian Li, Caoyun Fan, Hao He, Yaohui Jin. "Latent constraints on unsupervised text-graph alignment with information asymmetry", AAAI 2023 (CCF-A). [PDF]

Wenqing Chen, Jidong Tian, Caoyun Fan, Yitian Li, Hao He, Yaohui Jin. "Preference-controlled multi-objective reinforcement learning for conditional text generation", AAAI 2023 (CCF-A). [PDF]

Yitian Li, Jidong Tian, Wenqing Chen, Caoyun Fan, Hao He, Yaohui Jin. "To What Extent Do Natural Language Understanding Datasets Correlate to Logical Reasoning? A Method for Diagnosing Logical Reasoning", COLING 2022 (CCF-B). [PDF]

Wenqing Chen, Jidong Tian, Caoyun Fan, Hao He, Yaohui Jin. "Dependent multi-task learning with causal intervention for image captioning", IJCAI 2021 (CCF-A). [PDF]